Shuttle Robot Stakeholder

Storyboard Text

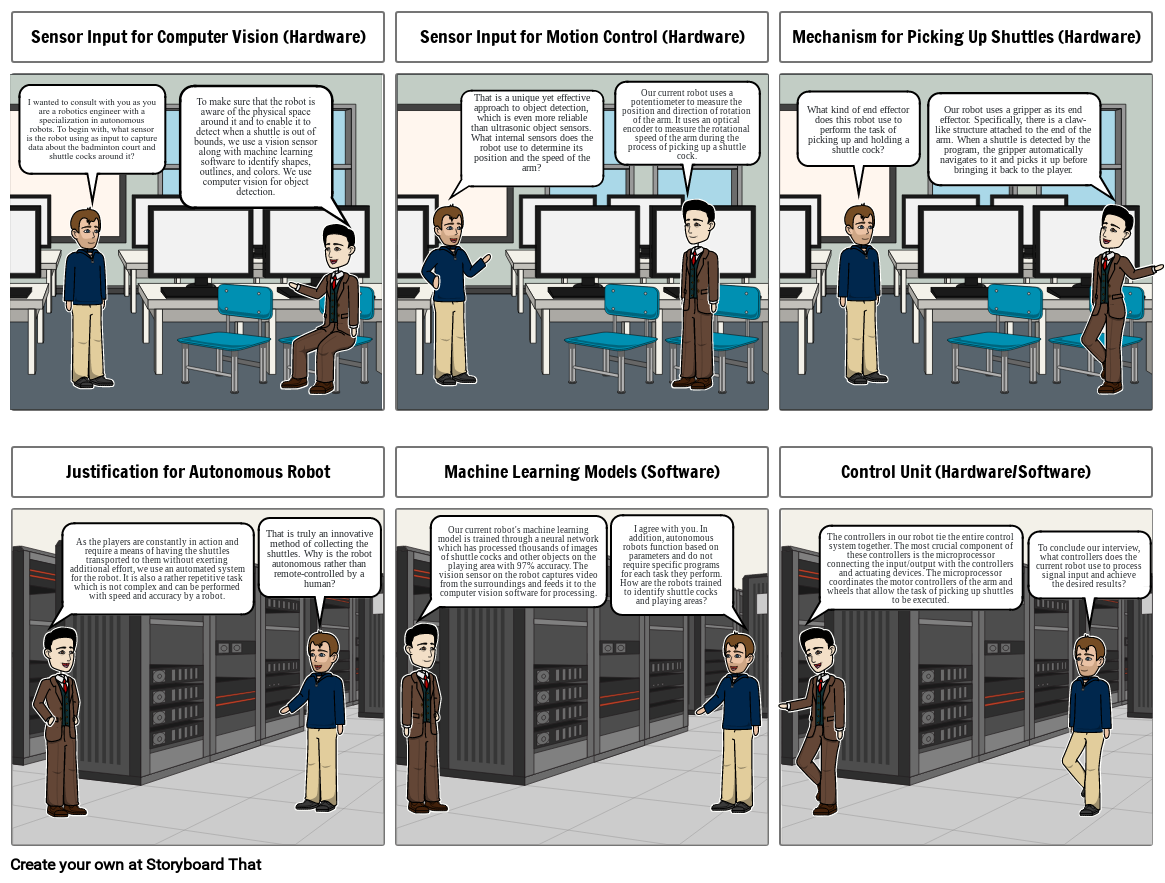

- Sensor Input for Computer Vision (Hardware)

- I wanted to consult with you because you are a robotics engineer specializing in autonomous robots. To begin with, what sensor does the robot use to capture data about the badminton court and the shuttlecocks around it?

- To make sure the robot is aware of the physical space around it and can detect when a shuttle is out of bounds, we use a vision sensor together with machine learning software that identifies shapes, outlines, and colors. In other words, we use computer vision for object detection.
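The out-of-bounds check mentioned in this answer can be sketched roughly as follows. This is only an illustration, not the robot's actual software: it assumes the vision software already reports each detected shuttle as a position in court coordinates (metres, measured from one corner), and the names `Detection` and `is_out_of_bounds` are hypothetical. The dimensions are those of a standard doubles court.

```python
from dataclasses import dataclass

# Standard badminton doubles court, in metres.
COURT_LENGTH_M = 13.4
COURT_WIDTH_M = 6.1


@dataclass
class Detection:
    """Hypothetical output of the vision software: a shuttle position
    in court coordinates, measured from one corner of the court."""
    x: float  # metres across the court width
    y: float  # metres along the court length


def is_out_of_bounds(d: Detection,
                     width: float = COURT_WIDTH_M,
                     length: float = COURT_LENGTH_M) -> bool:
    """Return True when the detected shuttle lies outside the court rectangle."""
    return not (0.0 <= d.x <= width and 0.0 <= d.y <= length)


if __name__ == "__main__":
    print(is_out_of_bounds(Detection(x=3.0, y=6.0)))  # inside the court
    print(is_out_of_bounds(Detection(x=7.0, y=3.0)))  # beyond the side line
```

A real system would also need to map pixel positions from the camera into court coordinates, which this sketch leaves out.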

- Sensor Input for Motion Control (Hardware)

- That is a unique yet effective approach to object detection, and one that can be more reliable than ultrasonic object sensors. What internal sensors does the robot use to determine the position and speed of its arm?

- Our current robot uses a potentiometer to measure the position and direction of rotation of the arm. It uses an optical encoder to measure the rotational speed of the arm while it picks up a shuttlecock.
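To make the two measurements concrete, here is a minimal sketch of how raw readings from such sensors are commonly converted into an arm angle and a rotational speed. The numbers are assumptions for illustration only and do not come from the interview: a 10-bit analog-to-digital converter, a potentiometer with 270 degrees of travel, and an encoder producing 360 counts per revolution. The function names are hypothetical.

```python
def pot_angle_deg(adc_value: int,
                  adc_max: int = 1023,
                  travel_deg: float = 270.0) -> float:
    """Convert a raw potentiometer reading into an arm angle in degrees.

    Assumes the reading scales linearly from 0 to adc_max over the
    potentiometer's full travel.
    """
    return adc_value / adc_max * travel_deg


def encoder_rpm(tick_delta: int,
                dt_s: float,
                counts_per_rev: int = 360) -> float:
    """Convert encoder counts seen over dt_s seconds into revolutions per minute.

    A negative tick_delta gives a negative speed, i.e. reverse rotation.
    """
    revolutions = tick_delta / counts_per_rev
    return revolutions / dt_s * 60.0


if __name__ == "__main__":
    print(pot_angle_deg(512))       # arm roughly at mid-travel
    print(encoder_rpm(90, 0.25))    # 90 counts in a quarter of a second
```

Comparing two successive potentiometer angles also gives the direction of rotation: an increasing angle means the arm is turning one way, a decreasing angle the other.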

- Mechanism for Picking Up Shuttles (Hardware)

- What kind of end effector does this robot use to pick up and hold a shuttlecock?

- Our robot uses a gripper as its end effector. Specifically, a claw-like structure is attached to the end of the arm. When the program detects a shuttle, the robot automatically navigates to it, and the gripper picks it up before the robot brings it back to the player.

- Justification for Autonomous Robot

- That is truly an innovative method of collecting the shuttles. Why is the robot autonomous rather than remote-controlled by a human?

- Because the players are constantly in action and need the shuttles brought to them without extra effort, we made the robot autonomous. Collecting shuttles is also a repetitive, simple task that a robot can perform quickly and accurately.

- Machine Learning Models (Software)

- I agree with you. In addition, autonomous robots function based on parameters and do not require specific programs for each task they perform. How are the robots trained to identify shuttlecocks and playing areas?

- Our current robot's machine learning model is a neural network trained on thousands of images of shuttlecocks and other objects on the playing area, and it identifies them with 97% accuracy. The vision sensor on the robot captures video of the surroundings and feeds it to the computer vision software for processing.

- Control Unit (Hardware/Software)

- To conclude our interview, what controllers does the current robot use to process signal input and achieve the desired results?

- The controllers in our robot tie the entire control system together. The most crucial component is the microprocessor, which connects the inputs and outputs to the controllers and actuating devices. It coordinates the motor controllers for the arm and the wheels so that the robot can carry out the task of picking up shuttles.
